This was a project from my time at Thinkwell Group. Of all the projects I worked on, it probably mixed the most interesting technologies: Unity, C#, C++, OpenCV, Arduino, OSC, a BeagleBone (not a Raspberry Pi), an ultrasonic sensor, infrared cameras, and infrared light projectors, all to create one seamless experience.

The goal of the task

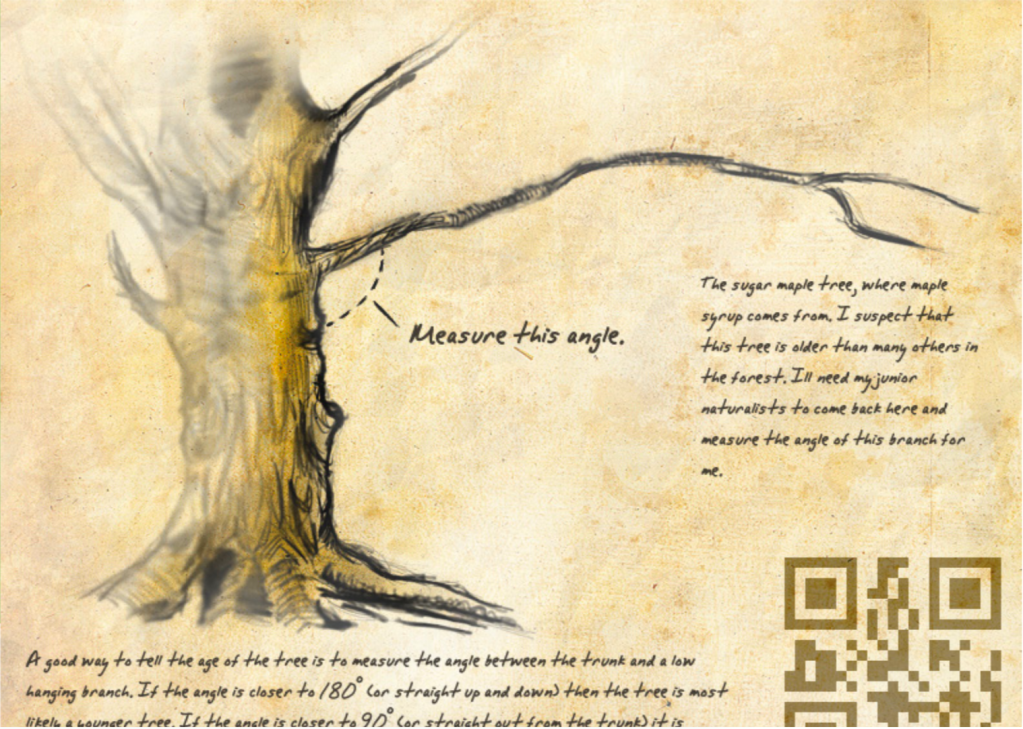

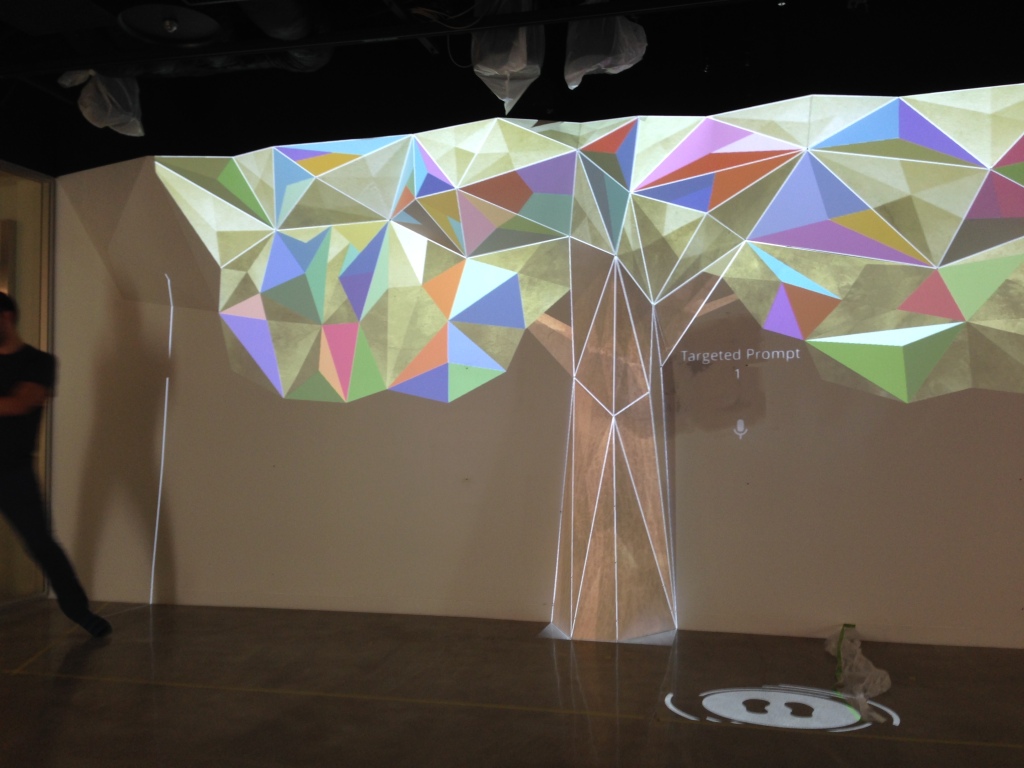

The given user journey was for a tree that dispensed knowledge. A guest would stand in front of the tree and ask questions, and the tree would answer. We knew that guests would arrive in groups. My immediate thought was that, with a group moving in front of the tree, it would feel pleasant to simulate the airflow they create, and that Unity, with its physics simulation, should be able to do that. It ended up being one of the defining features of our experience.

The tree went through many variations and a lot of testing. It is, as far as I know, currently in the offices of Google Tokyo. This is a description of the final version as it stood in 2016.

Asking

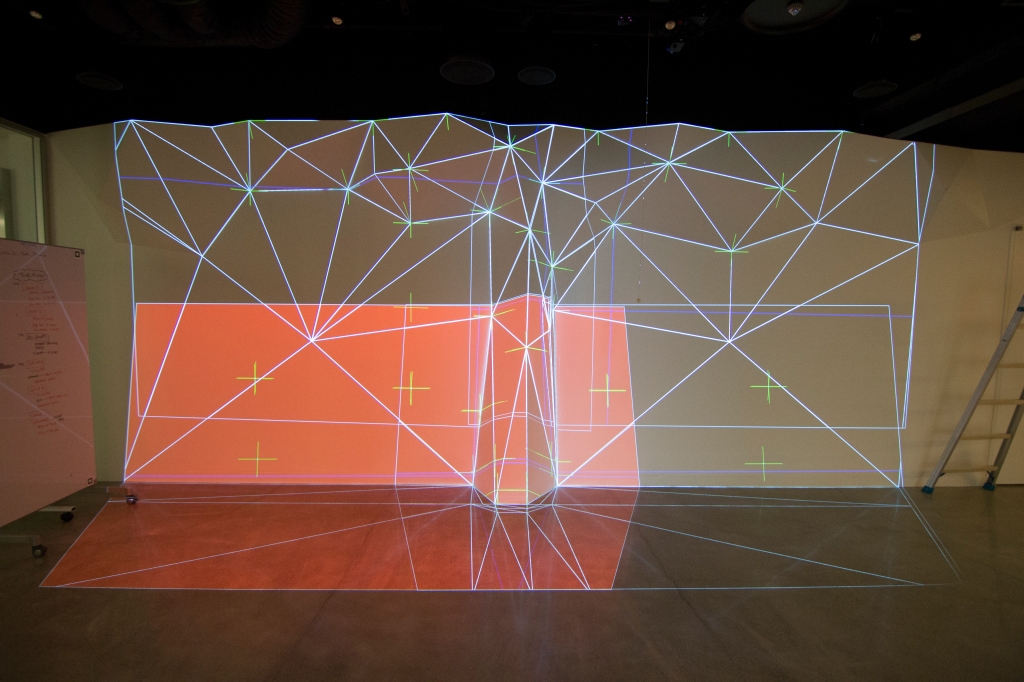

When the guests approach the tree, it is just a stylized tree, with ambient sound and music (written by Thadeus Reed) playing. The wall is shaped like the 3D image itself. There is a shape projected onto the floor inviting guests to come forward in a nonverbal way. This is an image of the tree with testing lines in the Tokyo building:

Here’s a wider-angle view, showing the lobby itself:

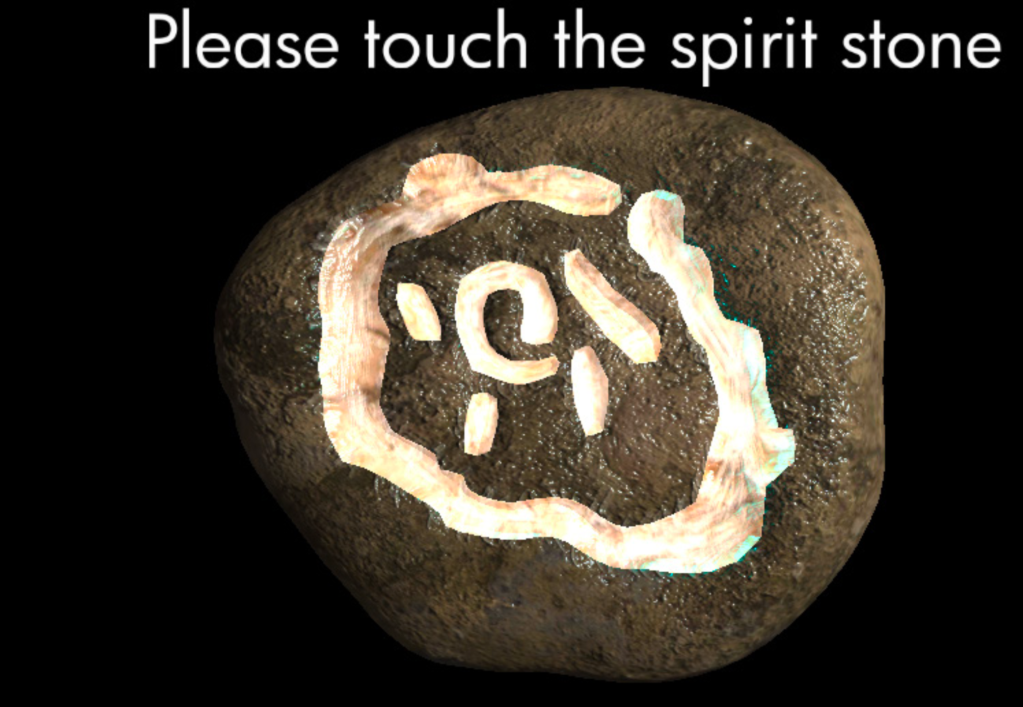

When a guest stands on the projected circle, they are detected by an ultrasonic sensor mounted on an Arduino board. The sensor simply reports the distance to whatever is in front of it. Usually, that’s the floor, but when someone steps on the circle, the distance gets much shorter. The Arduino board is connected to a BeagleBone single-board computer running an extremely simple program that just forwards this information via OSC to Unity. When Unity detects that the value has dropped below a given threshold, it can safely assume that someone is standing on the circle, and the shape of the projected circle changes. The guest is then asked explicitly, on the wall in front of them: “Please tell me what image you want to see”, in either English or Japanese. This was eventually changed to a sequence of more specific questions to keep the guests engaged. There’s also a logo that explicitly tells them that the tree is in audio capture mode, in other words, listening.
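The threshold test described above can be sketched as follows. This is a minimal illustration written in C++, not the code that ran in the installation (where the check lived in Unity). The struct name, the two separate thresholds, and the "consecutive readings" rule are all assumptions added here to show one way of keeping a noisy distance reading from flickering the circle on and off.

```cpp
// Hypothetical presence detector for the distance readings forwarded over OSC.
// All names and values are illustrative assumptions, not the ones used on site.
struct PresenceDetector {
    float enterBelowCm;    // a reading below this suggests someone is on the circle
    float exitAboveCm;     // a reading above this suggests the circle is empty again
    int   requiredReadings; // consecutive readings needed before the state flips
    bool  occupied = false;
    int   streak = 0;

    PresenceDetector(float enterCm, float exitCm, int readings)
        : enterBelowCm(enterCm), exitAboveCm(exitCm), requiredReadings(readings) {}

    // Feed one distance reading (in centimeters); returns the current state.
    bool update(float distanceCm) {
        // Which condition counts as "evidence for a change" depends on the state.
        bool wantsFlip = occupied ? (distanceCm > exitAboveCm)
                                  : (distanceCm < enterBelowCm);
        // Any contradicting reading resets the streak, so single spikes are ignored.
        streak = wantsFlip ? streak + 1 : 0;
        if (streak >= requiredReadings) {
            occupied = !occupied;
            streak = 0;
        }
        return occupied;
    }
};
```

With, say, `PresenceDetector d(180.0f, 220.0f, 3)`, three short readings in a row switch the state to occupied, and a single long reading afterwards does not switch it back. Using a gap between the two thresholds is a design choice of this sketch, meant to avoid rapid toggling when a reading hovers near a single cut-off.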

Seamless input, to an extent

Then the guest speaks to the tree. That ended up being a lot trickier than we expected. Among the many unexpected situations were:

- How should the system react if the guest steps away before the search is complete? (This happened almost every time, even though we assumed it wouldn’t.)

- What should happen if the guest changes their mind in the middle of a query?

- What should happen if the guest does not understand what is expected of them?

- What should be done if none of the guests step up to the tree? (Should someone from the building invite them to?)

When the guest does make their query, the words are transcribed to text in real time thanks to a Google speech recognition system. We had a hard time testing it in Los Angeles because it was surprisingly difficult to find Japanese speakers. When we did get to Tokyo, the microphone installation, which had been chosen to be completely invisible, turned out to have an echo. I remember it was a big problem, but since I’m not an audio person, I don’t remember all the details.

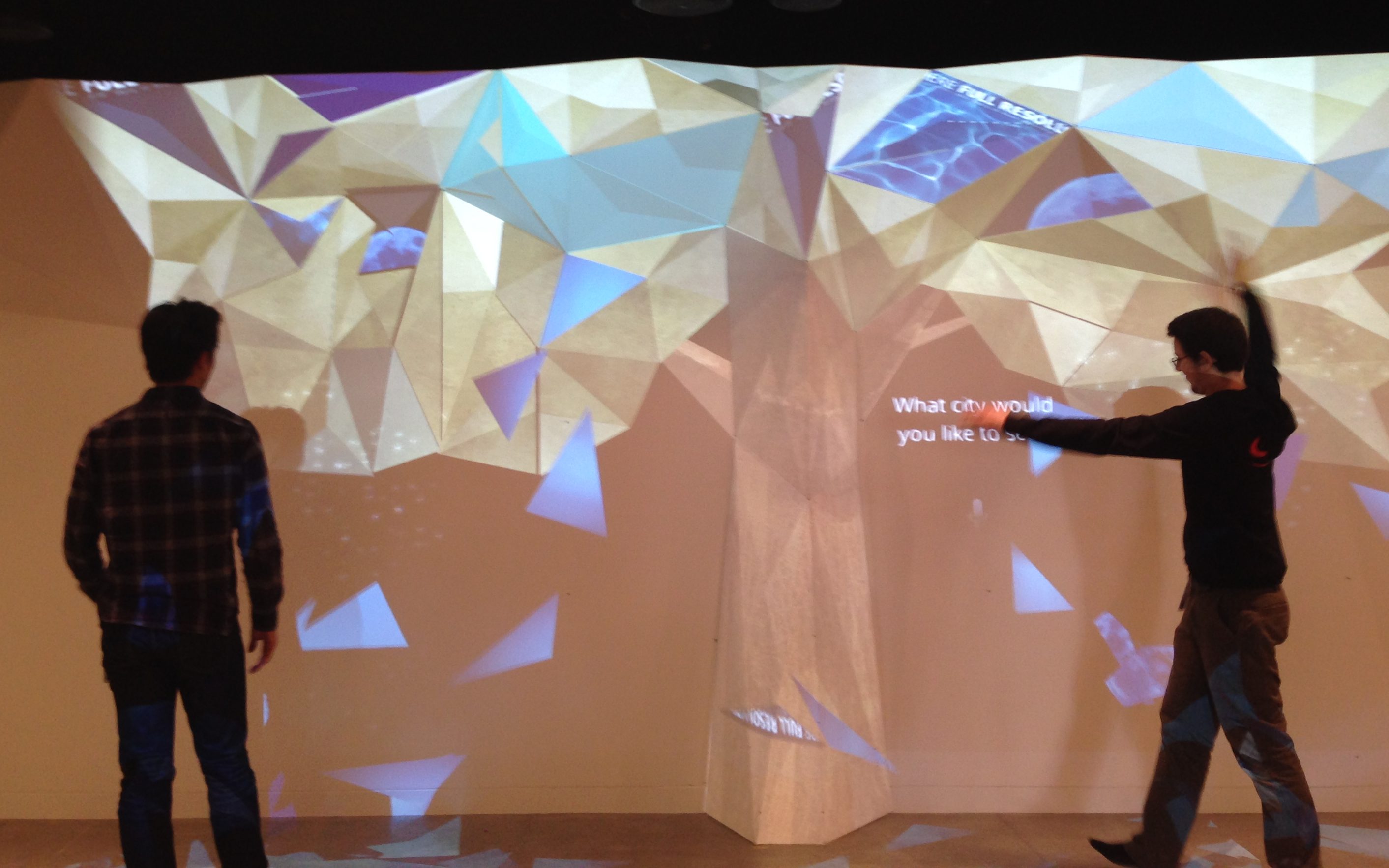

Here’s a tester making a request to the tree. Notice how the pattern on the floor has changed:

What I do remember is that the guests were split into four categories: male English-speaking guests, female English-speaking guests, male Japanese-speaking guests, and finally female Japanese-speaking guests. It was very difficult for the system to understand the last of those: female Japanese speakers did not always get their words recognized. Of course, they were the category that had been tested the least in Los Angeles.

Answers

Once a request is made, it is sent to the Google Images API via Unity. Some of the leaves change their textures to the top ten results. The leaves would always flutter (not really, more about that later) in the airflow caused by the guests walking by them, but from that point on, if the guests walk by too quickly, the leaves can actually detach and flutter downwards, still influenced by the airflow of the guests’ motion.

Here is the tree in Tokyo showing flowers:

The original user journey had the guests being able to kick the leaves once they were on the ground. Unfortunately, that was not possible. The problem was that the leaves on the ground were projected from the ceiling, so when guests leaned forward to look at them, the leaves were hidden by the guests’ own shadows. Also, our system was not good at detecting specific motions in depth.

Here’s Ellie from Google demonstrating the query feature.

Airflow

When the leaves have changed to images, the modified leaves respond to people moving in front of the tree as if a virtual airflow were created by their motion.

Here are testers moving by the tree to make the leaves fall:

Here’s Ellie from Google demonstrating the airflow feature.

To do this, the wall is filmed with an infrared camera. The camera has to be infrared; otherwise the projection itself would be filmed, creating a feedback loop. With an infrared camera and infrared light projectors, we only see the guests themselves and their motion. We then feed these images to OpenCV and analyze them with the Farneback optical flow algorithm using C++. This produces a matrix of vectors that tells us how much the content of each part of the image has shifted between two frames, in other words, what the airflow is. This is then sent to Unity using C#’s marshalling abilities, basically sharing a C array between C# and C++. We then adapt the raw data in the way explained by Ellie above so that the leaves respond to the motion in a more cartoony way. If we just followed real-life physics, the leaves would barely respond to our motion at all.
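As a rough sketch of the last two steps (sharing a plain C array across the C++/C# boundary and exaggerating the raw flow), here is a small C++ function. It does not call OpenCV itself; it assumes the flow has already been computed, for example by `cv::calcOpticalFlowFarneback`, whose output is a two-channel float matrix of per-pixel (dx, dy) displacements. The function name `ExaggerateFlow` and the `deadZone`, `gain`, and `maxMagnitude` parameters are hypothetical, and the mapping is just one plausible way to make small motions read as strong gusts, not the tuning actually used.

```cpp
#include <cmath>

// Hypothetical native-plugin entry point, exported with C linkage so that a
// managed caller can bind to it by name.
// `flow` holds interleaved (dx, dy) pairs, one pair per pixel.
// `out` is a caller-owned buffer of the same size that receives the result.
extern "C" void ExaggerateFlow(const float* flow, float* out, int pixelCount,
                               float deadZone, float gain, float maxMagnitude) {
    for (int i = 0; i < pixelCount; ++i) {
        const float dx = flow[2 * i];
        const float dy = flow[2 * i + 1];
        const float mag = std::sqrt(dx * dx + dy * dy);

        // Treat tiny displacements as sensor noise and output no wind at all.
        if (mag <= deadZone || mag == 0.0f) {
            out[2 * i] = 0.0f;
            out[2 * i + 1] = 0.0f;
            continue;
        }

        // Amplify the magnitude, clamp it, and keep the original direction.
        const float boosted = std::fmin(mag * gain, maxMagnitude);
        const float scale = boosted / mag;
        out[2 * i] = dx * scale;
        out[2 * i + 1] = dy * scale;
    }
}
```

On the C# side, a function like this would typically be declared with `[DllImport]` and handed a `float[]`, which is one way to read "sharing a C array" in the paragraph above. Writing into a buffer owned by the caller avoids any question of who frees the memory.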

Flutter

Before falling down, the leaves flutter as the guests move by. I could never get the leaves to flutter correctly. Then, a teammate had a better idea: just move the leaves’ normals around. It worked extremely well. That made the light bounce around the leaves as if they were fluttering without having to find the right motion. The edges didn’t move, but in this case, that was a good thing because they needed to keep their overall assembled shape. It was an elegantly simple solution to what could have been a very messy problem.
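To make the normals trick concrete, here is a minimal C++ sketch of perturbing a surface normal over time. It is only an illustration of the idea: in the actual project this would have happened inside Unity, and the `Vec3` type, the function name, and the `amplitude`, `frequencyHz`, and `phase` parameters are assumptions introduced here. The geometry is never touched; only the direction used for lighting wobbles.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Tilt a unit normal by a small, time-varying offset and renormalize it.
// `phase` lets each leaf wobble out of step with its neighbors.
// `amplitude` and `frequencyHz` are hypothetical tuning values.
Vec3 flutterNormal(Vec3 n, float timeSeconds, float phase,
                   float amplitude, float frequencyHz) {
    const float twoPi = 6.2831853f;
    const float a = twoPi * frequencyHz * timeSeconds + phase;

    // Offset two axes at different rates so the wobble does not look like a
    // simple back-and-forth swing.
    Vec3 p{ n.x + amplitude * std::sin(a),
            n.y + amplitude * std::cos(1.7f * a),
            n.z };

    // Renormalize so lighting calculations still receive a unit vector.
    const float len = std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
    if (len == 0.0f) {
        return n; // degenerate case: fall back to the unperturbed normal
    }
    return Vec3{ p.x / len, p.y / len, p.z / len };
}
```

Calling this every frame with the current time and a per-leaf `phase` changes how light appears to bounce off each leaf while its vertices, and therefore its silhouette, stay exactly where they are.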

3D wall

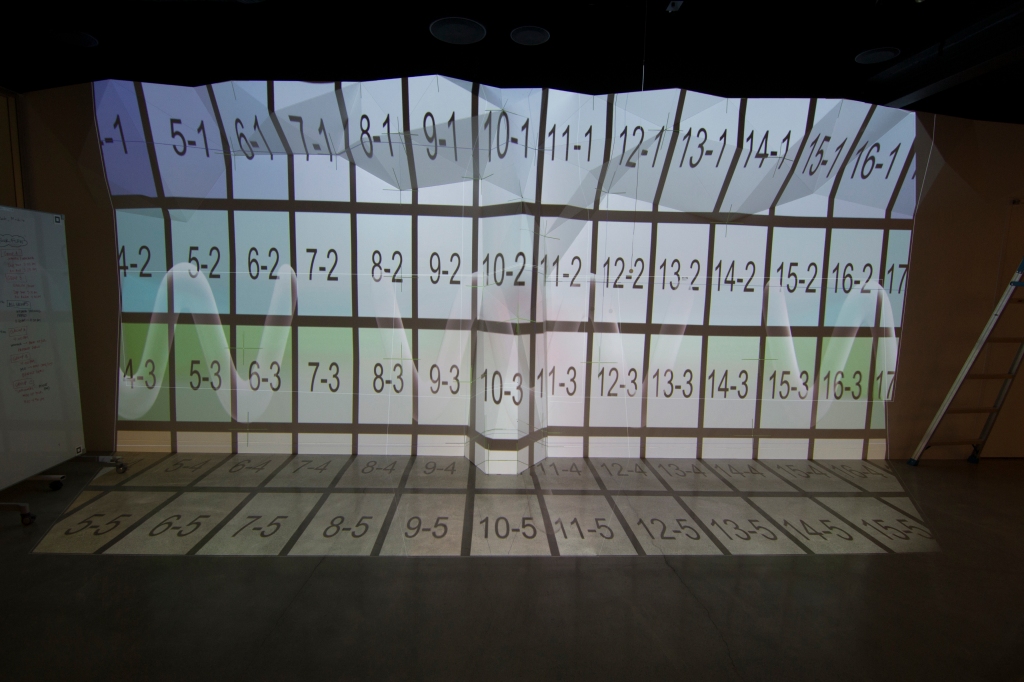

The image above shows that the wall the tree was projected onto was itself shaped to match the projected image. This was a nice idea in theory. In practice, it meant that the projection and the physical wall would always drift out of alignment after a short while. To keep them lined up, a maintenance system was set up. This is what it looked like:

Conclusion

The project was a creative challenge and very rewarding. It was definitely an unusual use of the Unity game engine and a mix of technologies that don’t come together often. Still, in the end we did manage to make a smooth, seamless experience that welcomes everyone at the Google Partner Plex Tokyo.